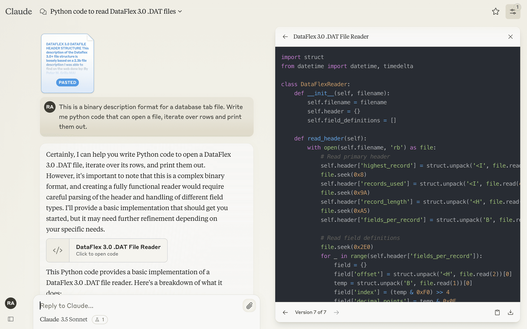

Apple Privacy Model and Confidential Computing

In its latest announcement, Apple introduced a range of new AI features across its ecosystem. One of the most interesting aspects is the concept of Private Cloud Compute (PCC).

In essence, the iPhone uses a small, efficient LLM to handle incoming requests first. This model is not very powerful; its capabilities are roughly comparable to modern 7B-parameter models. However, it is fast and processes requests securely, because everything stays on the device.

It becomes particularly interesting when the LLM-controlled system recognizes that it needs more computing power to process the request.

In this case, it has two options:

What is Private Cloud Compute?

It is a protected Apple data center whose servers run on Apple's own chips and host more powerful large language models. The setup gives strong guarantees that your personal requests are handled securely and that nobody, not even Apple, can see the questions or the answers.

This is achieved through a combination of dedicated hardware, encryption, secured VM images and attestation between the software and hardware, so that the iPhone only sends a request to servers that can cryptographically prove they run approved software. The goal is to make breaking this setup very hard and expensive, even for Apple itself or for governments.
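To make the attestation idea concrete, here is a minimal Python sketch of an attestation-gated request. It is a toy model under stated assumptions, not Apple's actual protocol: `measure`, `attest`, `verify`, `send_request` and `HARDWARE_KEY` are hypothetical names, and an HMAC with a shared demo key stands in for the asymmetric, hardware-rooted signatures that real systems use. The client refuses to send its request unless the server proves it runs a software image from a published list.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical stand-in: real hardware signs attestations with an asymmetric
# key rooted in the chip. A shared HMAC key keeps this sketch stdlib-only.
HARDWARE_KEY = b"demo-hardware-root-key"


def measure(image: bytes) -> str:
    """Return the measurement (SHA-256 digest) of a software image."""
    return hashlib.sha256(image).hexdigest()


@dataclass
class Attestation:
    measurement: str  # hash of the software the server claims to run
    signature: bytes  # "hardware" signature over that measurement


def attest(image: bytes) -> Attestation:
    """Server side: the hardware measures the loaded image and signs it."""
    m = measure(image)
    sig = hmac.new(HARDWARE_KEY, m.encode(), hashlib.sha256).digest()
    return Attestation(m, sig)


def verify(att: Attestation, published: set[str]) -> bool:
    """Client side: accept only correctly signed, publicly listed images."""
    expected = hmac.new(HARDWARE_KEY, att.measurement.encode(), hashlib.sha256).digest()
    signature_ok = hmac.compare_digest(expected, att.signature)
    return signature_ok and att.measurement in published


def send_request(prompt: str, att: Attestation, published: set[str]) -> str:
    """Only release the prompt to a server that passed attestation."""
    if not verify(att, published):
        raise PermissionError("server failed attestation; request not sent")
    return f"sent: {prompt}"  # placeholder for encrypting and transmitting


good_image = b"inference-server v1.0"
published = {measure(good_image)}  # e.g. entries in a public transparency log

print(send_request("hello", attest(good_image), published))  # sent: hello
try:
    send_request("hello", attest(b"patched image with logging"), published)
except PermissionError as err:
    print(err)  # server failed attestation; request not sent
```

Publishing the list of accepted measurements is what lets outsiders audit which software is allowed to see requests; in this sketch that list is simply a Python set.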

Apple is all about consumer electronics. Is there anything comparable for companies?

Yes, there is. It's called confidential computing. The concept has been around for some time (see the Confidential Computing Consortium), but only recently has Nvidia properly extended it to GPUs. Nvidia introduced it with the Hopper architecture (H100 GPUs) and, according to Nvidia, almost completely eliminated the performance penalty with the Blackwell architecture.

The concept is the same as Apple's PCC:

- data is encrypted in transit and at rest;
- data is decrypted only while it is being processed, inside a hardware-protected trusted execution environment (TEE);
- hardware and software are designed to make it practically impossible (or at least very hard and expensive) to look at the data while it is decrypted.
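The data lifecycle described in this list can be illustrated with a toy model in Python. Everything here is an assumption for illustration: `ToyEnclave` and `keystream_xor` are hypothetical, the SHAKE-256 keystream XOR is a deliberately simple stand-in that is NOT a secure cipher (real systems use authenticated encryption such as AES-GCM), and the session key is assumed to have been agreed on after a successful attestation. The point is only that the plaintext exists inside the enclave's `run` method and nowhere in between.

```python
import hashlib
import os


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with a SHAKE-256 keystream.

    Illustration only, NOT secure; the same call encrypts and decrypts.
    """
    stream = hashlib.shake_256(key + nonce).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


class ToyEnclave:
    """Hypothetical trusted execution environment holding the session key."""

    def __init__(self, key: bytes):
        self._key = key  # in real hardware, this key never leaves the TEE

    def run(self, nonce: bytes, ciphertext: bytes) -> tuple[bytes, bytes]:
        # Plaintext exists only inside this method, i.e. "inside the TEE".
        prompt = keystream_xor(self._key, nonce, ciphertext).decode()
        answer = f"answer to: {prompt}".encode()  # stand-in for model inference
        out_nonce = os.urandom(16)
        return out_nonce, keystream_xor(self._key, out_nonce, answer)


key = os.urandom(32)  # assumed session key, shared only by client and TEE
enclave = ToyEnclave(key)

nonce = os.urandom(16)
ciphertext = keystream_xor(key, nonce, b"Summarize our Q3 contract")  # in transit
reply_nonce, reply = enclave.run(nonce, ciphertext)  # only ciphertext crosses over
print(keystream_xor(key, reply_nonce, reply).decode())  # answer to: Summarize our Q3 contract
```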

Major cloud providers are already testing VMs with confidential GPU computing (e.g. Microsoft Azure with H100 GPUs since 2023 and Google Cloud with H100 GPUs since 2024).

This approach is interesting because it offers a third option to companies that need to build a secure LLM-driven system: