We’ve been benchmarking LLMs in business automation tasks for a year and a half already. It feels only appropriate that at the end of 2024, right when we are planning Benchmark v2, you will see our old benchmarks beaten. You can probably already guess the name of the winning model. But let’s not get ahead of ourselves.

Benchmarking Llama 3.3, Amazon Nova - nothing outstanding

Google Gemini 1206, Gemini 2.0 Flash Experimental - TOP 10

DeepSeek v3

Manual benchmark of OpenAI o1 pro - Gold Standard.

Base o1 (medium reasoning effort) - 3rd place

Our thoughts about recently announced o3

Our predictions for the 2025 landscape of LLM in business integration

Enterprise RAG Challenge will take place on February 27th

LLM Benchmarks | December 2024

The benchmarks evaluate the models in terms of their suitability for digital product development. The higher the score, the better.

☁️ - Cloud models with proprietary license

✅ - Open source models that can be run locally without restrictions

🦙 - Local models with Llama license

Code - Can the model generate code and help with programming?

Cost - The estimated cost of running the workload. For cloud-based models, we calculate the cost according to the provider’s pricing. For on-premises models, we estimate the cost based on the GPU requirements of each model, GPU rental cost, model speed, and operational overhead.

CRM - How well does the model support work with product catalogs and marketplaces?

Docs - How well can the model work with large documents and knowledge bases?

Integrate - Can the model easily interact with external APIs, services and plugins?

Marketing - How well can the model support marketing activities, e.g. brainstorming, idea generation and text generation?

Reason - How well can the model reason and draw conclusions in a given context?

The "Speed" column indicates the estimated speed of the model in requests per second (without batching). The higher the speed, the better.
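To make the on-premises cost estimate more tangible, here is a minimal sketch of how such a figure could be derived from the four inputs named above. The formula, the function name `workload_cost_eur` and all numbers are assumptions for illustration only; the exact calculation behind the table is not published here.

```python
def workload_cost_eur(
    requests: int,
    rps: float,
    gpus: int,
    gpu_hour_eur: float,
    overhead: float = 0.0,
) -> float:
    """Rough cost of running a workload on rented GPUs.

    requests      - number of requests in the workload
    rps           - model speed in requests per second (no batching)
    gpus          - number of GPUs the model needs
    gpu_hour_eur  - rental price of one GPU per hour
    overhead      - operational overhead as a fraction (0.2 = 20 %)
    """
    hours = requests / rps / 3600          # wall-clock time the GPUs are busy
    return hours * gpus * gpu_hour_eur * (1 + overhead)


# Hypothetical example: 3600 requests at 1 rps on 2 GPUs rented at 1.50 EUR/hour
print(workload_cost_eur(3600, 1.0, 2, 1.5))
```

The sketch also shows why the Speed column matters for local models: halving the requests per second doubles the GPU time, and with it the cost.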

| Model | Code | CRM | Docs | Integrate | Marketing | Reason | Final | Cost | Speed |
|---|---|---|---|---|---|---|---|---|---|
| 1. GPT o1 pro (manual) ☁️ | 100 | 100 | 97 | 100 | 95 | 87 | 97 | 200.00 € | 1.00 rps |
| 2. GPT o1-preview v1/2024-09-12 ☁️ | 95 | 92 | 94 | 95 | 88 | 87 | 92 | 52.32 € | 0.08 rps |
| 3. GPT o1 v1/2024-12-17 ☁️ | 100 | 95 | 94 | 91 | 82 | 83 | 91 | 30.63 € | 0.17 rps |
| 4. GPT o1-mini v1/2024-09-12 ☁️ | 93 | 96 | 94 | 83 | 82 | 87 | 89 | 8.15 € | 0.16 rps |
| 5. GPT-4o v3/2024-11-20 ☁️ | 86 | 97 | 94 | 95 | 88 | 72 | 89 | 0.63 € | 1.14 rps |
| 6. GPT-4o v1/2024-05-13 ☁️ | 90 | 96 | 100 | 92 | 78 | 74 | 88 | 1.21 € | 1.44 rps |
| 7. Google Gemini 1.5 Pro v2 ☁️ | 86 | 97 | 94 | 99 | 78 | 74 | 88 | 1.00 € | 1.18 rps |
| 8. X-AI Grok 2 v2/1212 ⚠️ | 66 | 95 | 97 | 97 | 88 | 78 | 87 | 0.58 € | 0.99 rps |
| 9. GPT-4 Turbo v5/2024-04-09 ☁️ | 86 | 99 | 98 | 96 | 88 | 43 | 85 | 2.45 € | 0.84 rps |
| 10. Google Gemini 2.0 Flash Exp ☁️ | 63 | 96 | 100 | 100 | 82 | 62 | 84 | 0.03 € | 0.85 rps |
| 11. Google Gemini Exp 1121 ☁️ | 70 | 97 | 97 | 95 | 72 | 72 | 84 | 0.89 € | 0.49 rps |
| 12. GPT-4o v2/2024-08-06 ☁️ | 90 | 84 | 97 | 86 | 82 | 59 | 83 | 0.63 € | 1.49 rps |
| 13. Google Gemini 1.5 Pro 0801 ☁️ | 84 | 92 | 79 | 100 | 70 | 74 | 83 | 0.90 € | 0.83 rps |
| 14. Qwen 2.5 72B Instruct ⚠️ | 79 | 92 | 94 | 97 | 71 | 59 | 82 | 0.10 € | 0.66 rps |
| 15. Llama 3.1 405B Hermes 3 🦙 | 68 | 93 | 89 | 98 | 88 | 53 | 81 | 0.54 € | 0.49 rps |
| 16. Claude 3.5 Sonnet v2 ☁️ | 82 | 97 | 93 | 84 | 71 | 57 | 81 | 0.95 € | 0.09 rps |
| 17. GPT-4 v1/0314 ☁️ | 90 | 88 | 98 | 73 | 88 | 45 | 80 | 7.04 € | 1.31 rps |
| 18. X-AI Grok 2 v1/1012 ⚠️ | 63 | 93 | 87 | 90 | 88 | 58 | 80 | 1.03 € | 0.31 rps |
| 19. GPT-4 v2/0613 ☁️ | 90 | 83 | 95 | 73 | 88 | 45 | 79 | 7.04 € | 2.16 rps |
| 20. DeepSeek v3 671B ⚠️ | 62 | 95 | 97 | 85 | 75 | 55 | 78 | 0.03 € | 0.49 rps |
| 21. GPT-4o Mini ☁️ | 63 | 87 | 80 | 73 | 100 | 65 | 78 | 0.04 € | 1.46 rps |
| 22. Claude 3.5 Sonnet v1 ☁️ | 72 | 83 | 89 | 87 | 80 | 58 | 78 | 0.94 € | 0.09 rps |
| 23. Claude 3 Opus ☁️ | 69 | 88 | 100 | 74 | 76 | 58 | 77 | 4.69 € | 0.41 rps |
| 24. Meta Llama3.1 405B Instruct 🦙 | 81 | 93 | 92 | 75 | 75 | 48 | 77 | 2.39 € | 1.16 rps |
| 25. GPT-4 Turbo v4/0125-preview ☁️ | 66 | 97 | 100 | 83 | 75 | 43 | 77 | 2.45 € | 0.84 rps |
| 26. Google LearnLM 1.5 Pro Experimental ⚠️ | 48 | 97 | 85 | 96 | 64 | 72 | 77 | 0.31 € | 0.83 rps |
| 27. GPT-4 Turbo v3/1106-preview ☁️ | 66 | 75 | 98 | 73 | 88 | 60 | 76 | 2.46 € | 0.68 rps |
| 28. Google Gemini Exp 1206 ☁️ | 52 | 100 | 85 | 77 | 75 | 69 | 76 | 0.88 € | 0.16 rps |
| 29. Qwen 2.5 32B Coder Instruct ⚠️ | 43 | 94 | 98 | 98 | 76 | 46 | 76 | 0.05 € | 0.82 rps |
| 30. DeepSeek v2.5 236B ⚠️ | 57 | 80 | 91 | 80 | 88 | 57 | 75 | 0.03 € | 0.42 rps |
| 31. Meta Llama 3.1 70B Instruct f16 🦙 | 74 | 89 | 90 | 75 | 75 | 48 | 75 | 1.79 € | 0.90 rps |
| 32. Google Gemini 1.5 Flash v2 ☁️ | 64 | 96 | 89 | 76 | 81 | 44 | 75 | 0.06 € | 2.01 rps |
| 33. Google Gemini 1.5 Pro 0409 ☁️ | 68 | 97 | 96 | 80 | 75 | 26 | 74 | 0.95 € | 0.59 rps |
| 34. Meta Llama 3 70B Instruct 🦙 | 81 | 83 | 84 | 67 | 81 | 45 | 73 | 0.06 € | 0.85 rps |
| 35. GPT-3.5 v2/0613 ☁️ | 68 | 81 | 73 | 87 | 81 | 50 | 73 | 0.34 € | 1.46 rps |
| 36. Amazon Nova Lite ⚠️ | 67 | 78 | 74 | 94 | 62 | 62 | 73 | 0.02 € | 2.19 rps |
| 37. Mistral Large 123B v2/2407 ☁️ | 68 | 79 | 68 | 75 | 75 | 70 | 72 | 0.57 € | 1.02 rps |
| 38. Google Gemini Flash 1.5 8B ☁️ | 70 | 93 | 78 | 67 | 76 | 48 | 72 | 0.01 € | 1.19 rps |
| 39. Google Gemini 1.5 Pro 0514 ☁️ | 73 | 96 | 79 | 100 | 25 | 60 | 72 | 1.07 € | 0.92 rps |
| 40. Google Gemini 1.5 Flash 0514 ☁️ | 32 | 97 | 100 | 76 | 72 | 52 | 72 | 0.06 € | 1.77 rps |
| 41. Google Gemini 1.0 Pro ☁️ | 66 | 86 | 83 | 79 | 88 | 28 | 71 | 0.37 € | 1.36 rps |
| 42. Meta Llama 3.2 90B Vision 🦙 | 74 | 84 | 87 | 77 | 71 | 32 | 71 | 0.23 € | 1.10 rps |
| 43. GPT-3.5 v3/1106 ☁️ | 68 | 70 | 71 | 81 | 78 | 58 | 71 | 0.24 € | 2.33 rps |
| 44. Claude 3.5 Haiku ☁️ | 52 | 80 | 72 | 75 | 75 | 68 | 70 | 0.32 € | 1.24 rps |
| 45. Meta Llama 3.3 70B Instruct 🦙 | 74 | 78 | 74 | 77 | 71 | 46 | 70 | 0.10 € | 0.71 rps |
| 46. GPT-3.5 v4/0125 ☁️ | 63 | 87 | 71 | 77 | 78 | 43 | 70 | 0.12 € | 1.43 rps |
| 47. Cohere Command R+ ☁️ | 63 | 80 | 76 | 72 | 70 | 58 | 70 | 0.83 € | 1.90 rps |
| 48. Mistral Large 123B v3/2411 ☁️ | 68 | 75 | 64 | 76 | 82 | 51 | 70 | 0.56 € | 0.66 rps |
| 49. Qwen1.5 32B Chat f16 ⚠️ | 70 | 90 | 82 | 76 | 78 | 20 | 69 | 0.97 € | 1.66 rps |
| 50. Gemma 2 27B IT ⚠️ | 61 | 72 | 87 | 74 | 89 | 32 | 69 | 0.07 € | 0.90 rps |
| 51. Mistral 7B OpenChat-3.5 v3 0106 f16 ✅ | 68 | 87 | 67 | 74 | 88 | 25 | 68 | 0.32 € | 3.39 rps |
| 52. Meta Llama 3 8B Instruct f16 🦙 | 79 | 62 | 68 | 70 | 80 | 41 | 67 | 0.32 € | 3.33 rps |
| 53. Gemma 7B OpenChat-3.5 v3 0106 f16 ✅ | 63 | 67 | 84 | 58 | 81 | 46 | 67 | 0.21 € | 5.09 rps |
| 54. GPT-3.5-instruct 0914 ☁️ | 47 | 92 | 69 | 69 | 88 | 33 | 66 | 0.35 € | 2.15 rps |
| 55. Amazon Nova Pro ⚠️ | 64 | 78 | 82 | 79 | 52 | 41 | 66 | 0.22 € | 1.34 rps |
| 56. GPT-3.5 v1/0301 ☁️ | 55 | 82 | 69 | 81 | 82 | 26 | 66 | 0.35 € | 4.12 rps |
| 57. Llama 3 8B OpenChat-3.6 20240522 f16 ✅ | 76 | 51 | 76 | 65 | 88 | 38 | 66 | 0.28 € | 3.79 rps |
| 58. Mistral 7B OpenChat-3.5 v1 f16 ✅ | 58 | 72 | 72 | 71 | 88 | 33 | 66 | 0.49 € | 2.20 rps |
| 59. Mistral 7B OpenChat-3.5 v2 1210 f16 ✅ | 63 | 73 | 72 | 66 | 88 | 30 | 65 | 0.32 € | 3.40 rps |
| 60. Qwen 2.5 7B Instruct ⚠️ | 48 | 77 | 80 | 68 | 69 | 47 | 65 | 0.07 € | 1.25 rps |
| 61. Starling 7B-alpha f16 ⚠️ | 58 | 66 | 67 | 73 | 88 | 34 | 64 | 0.58 € | 1.85 rps |
| 62. Mistral Nemo 12B v1/2407 ☁️ | 54 | 58 | 51 | 99 | 75 | 49 | 64 | 0.03 € | 1.22 rps |
| 63. Meta Llama 3.2 11B Vision 🦙 | 70 | 71 | 65 | 70 | 71 | 36 | 64 | 0.04 € | 1.49 rps |
| 64. Llama 3 8B Hermes 2 Theta 🦙 | 61 | 73 | 74 | 74 | 85 | 16 | 64 | 0.05 € | 0.55 rps |
| 65. Claude 3 Haiku ☁️ | 64 | 69 | 64 | 75 | 75 | 35 | 64 | 0.08 € | 0.52 rps |
| 66. Yi 1.5 34B Chat f16 ⚠️ | 47 | 78 | 70 | 74 | 86 | 26 | 64 | 1.18 € | 1.37 rps |
| 67. Liquid: LFM 40B MoE ⚠️ | 72 | 69 | 65 | 63 | 82 | 24 | 63 | 0.00 € | 1.45 rps |
| 68. Meta Llama 3.1 8B Instruct f16 🦙 | 57 | 74 | 62 | 74 | 74 | 32 | 62 | 0.45 € | 2.41 rps |
| 69. Qwen2 7B Instruct f32 ⚠️ | 50 | 81 | 81 | 61 | 66 | 31 | 62 | 0.46 € | 2.36 rps |
| 70. Claude 3 Sonnet ☁️ | 72 | 41 | 74 | 74 | 78 | 28 | 61 | 0.95 € | 0.85 rps |
| 71. Mistral Small v3/2409 ☁️ | 43 | 75 | 71 | 74 | 75 | 26 | 61 | 0.06 € | 0.81 rps |
| 72. Mistral Pixtral 12B ✅ | 53 | 69 | 73 | 63 | 64 | 40 | 60 | 0.03 € | 0.83 rps |
| 73. Mixtral 8x22B API (Instruct) ☁️ | 53 | 62 | 62 | 97 | 75 | 7 | 59 | 0.17 € | 3.12 rps |
| 74. Anthropic Claude Instant v1.2 ☁️ | 58 | 75 | 65 | 77 | 65 | 16 | 59 | 2.10 € | 1.49 rps |
| 75. Codestral Mamba 7B v1 ✅ | 53 | 66 | 51 | 97 | 71 | 17 | 59 | 0.30 € | 2.82 rps |
| 76. Inflection 3 Productivity ⚠️ | 46 | 59 | 39 | 70 | 79 | 61 | 59 | 0.92 € | 0.17 rps |
| 77. Anthropic Claude v2.0 ☁️ | 63 | 52 | 55 | 67 | 84 | 34 | 59 | 2.19 € | 0.40 rps |
| 78. Cohere Command R ☁️ | 45 | 66 | 57 | 74 | 84 | 27 | 59 | 0.13 € | 2.50 rps |
| 79. Amazon Nova Micro ⚠️ | 58 | 68 | 64 | 71 | 59 | 31 | 59 | 0.01 € | 2.41 rps |
| 80. Qwen1.5 7B Chat f16 ⚠️ | 56 | 81 | 60 | 56 | 60 | 36 | 58 | 0.29 € | 3.76 rps |
| 81. Mistral Large v1/2402 ☁️ | 37 | 49 | 70 | 83 | 84 | 25 | 58 | 0.58 € | 2.11 rps |
| 82. Microsoft WizardLM 2 8x22B ⚠️ | 48 | 76 | 79 | 59 | 62 | 22 | 58 | 0.13 € | 0.70 rps |
| 83. Qwen1.5 14B Chat f16 ⚠️ | 50 | 58 | 51 | 72 | 84 | 22 | 56 | 0.36 € | 3.03 rps |
| 84. MistralAI Ministral 8B ✅ | 56 | 55 | 41 | 82 | 68 | 30 | 55 | 0.02 € | 1.02 rps |
| 85. Anthropic Claude v2.1 ☁️ | 29 | 58 | 59 | 78 | 75 | 32 | 55 | 2.25 € | 0.35 rps |
| 86. Mistral 7B OpenOrca f16 ☁️ | 54 | 57 | 76 | 36 | 78 | 27 | 55 | 0.41 € | 2.65 rps |
| 87. MistralAI Ministral 3B ✅ | 50 | 48 | 39 | 89 | 60 | 41 | 54 | 0.01 € | 1.02 rps |
| 88. Llama2 13B Vicuna-1.5 f16 🦙 | 50 | 37 | 55 | 62 | 82 | 37 | 54 | 0.99 € | 1.09 rps |
| 89. Mistral 7B Instruct v0.1 f16 ☁️ | 34 | 71 | 69 | 63 | 62 | 23 | 54 | 0.75 € | 1.43 rps |
| 90. Meta Llama 3.2 3B 🦙 | 52 | 71 | 66 | 71 | 44 | 14 | 53 | 0.01 € | 1.25 rps |
| 91. Google Recurrent Gemma 9B IT f16 ⚠️ | 58 | 27 | 71 | 64 | 56 | 23 | 50 | 0.89 € | 1.21 rps |
| 92. Codestral 22B v1 ✅ | 38 | 47 | 44 | 84 | 66 | 13 | 49 | 0.06 € | 4.03 rps |
| 93. Qwen: QwQ 32B Preview ⚠️ | 43 | 32 | 74 | 52 | 48 | 40 | 48 | 0.05 € | 0.63 rps |
| 94. Llama2 13B Hermes f16 🦙 | 50 | 24 | 37 | 75 | 60 | 42 | 48 | 1.00 € | 1.07 rps |
| 95. IBM Granite 34B Code Instruct f16 ☁️ | 63 | 49 | 34 | 67 | 57 | 7 | 46 | 1.07 € | 1.51 rps |
| 96. Meta Llama 3.2 1B 🦙 | 32 | 40 | 33 | 53 | 68 | 51 | 46 | 0.02 € | 1.69 rps |
| 97. Mistral Small v2/2402 ☁️ | 33 | 42 | 45 | 88 | 56 | 8 | 46 | 0.06 € | 3.21 rps |
| 98. Mistral Small v1/2312 (Mixtral) ☁️ | 10 | 67 | 63 | 65 | 56 | 8 | 45 | 0.06 € | 2.21 rps |
| 99. DBRX 132B Instruct ⚠️ | 43 | 39 | 43 | 74 | 59 | 10 | 45 | 0.26 € | 1.31 rps |
| 100. NVIDIA Llama 3.1 Nemotron 70B Instruct 🦙 | 68 | 54 | 25 | 72 | 28 | 21 | 45 | 0.09 € | 0.53 rps |
| 101. Mistral Medium v1/2312 ☁️ | 41 | 43 | 44 | 59 | 62 | 12 | 44 | 0.81 € | 0.35 rps |
| 102. Microsoft WizardLM 2 7B ⚠️ | 53 | 34 | 42 | 66 | 53 | 13 | 43 | 0.02 € | 0.89 rps |
| 103. Llama2 13B Puffin f16 🦙 | 37 | 15 | 44 | 67 | 56 | 39 | 43 | 4.70 € | 0.23 rps |
| 104. Mistral Tiny v1/2312 (7B Instruct v0.2) ☁️ | 22 | 47 | 59 | 53 | 62 | 8 | 42 | 0.05 € | 2.39 rps |
| 105. Gemma 2 9B IT ⚠️ | 45 | 25 | 47 | 36 | 68 | 13 | 39 | 0.02 € | 0.88 rps |
| 106. Meta Llama2 13B chat f16 🦙 | 22 | 38 | 17 | 65 | 75 | 6 | 37 | 0.75 € | 1.44 rps |
| 107. Mistral 7B Zephyr-β f16 ✅ | 37 | 34 | 46 | 62 | 29 | 4 | 35 | 0.46 € | 2.34 rps |
| 108. Meta Llama2 7B chat f16 🦙 | 22 | 33 | 20 | 62 | 50 | 18 | 34 | 0.56 € | 1.93 rps |
| 109. Mistral 7B Notus-v1 f16 ⚠️ | 10 | 54 | 25 | 60 | 48 | 4 | 33 | 0.75 € | 1.43 rps |
| 110. Orca 2 13B f16 ⚠️ | 18 | 22 | 32 | 29 | 67 | 20 | 31 | 0.95 € | 1.14 rps |
| 111. Mistral 7B Instruct v0.2 f16 ☁️ | 11 | 30 | 54 | 25 | 58 | 8 | 31 | 0.96 € | 1.12 rps |
| 112. Mistral 7B v0.1 f16 ☁️ | 0 | 9 | 48 | 63 | 52 | 12 | 31 | 0.87 € | 1.23 rps |
| 113. Google Gemma 2B IT f16 ⚠️ | 33 | 28 | 16 | 47 | 15 | 20 | 27 | 0.30 € | 3.54 rps |
| 114. Microsoft Phi 3 Medium 4K Instruct 14B f16 ⚠️ | 5 | 34 | 30 | 32 | 47 | 8 | 26 | 0.82 € | 1.32 rps |
| 115. Orca 2 7B f16 ⚠️ | 22 | 0 | 26 | 26 | 52 | 4 | 22 | 0.78 € | 1.38 rps |
| 116. Google Gemma 7B IT f16 ⚠️ | 0 | 0 | 0 | 6 | 62 | 0 | 11 | 0.99 € | 1.08 rps |
| 117. Meta Llama2 7B f16 🦙 | 0 | 5 | 22 | 3 | 28 | 2 | 10 | 0.95 € | 1.13 rps |
| 118. Yi 1.5 9B Chat f16 ⚠️ | 0 | 4 | 29 | 17 | 0 | 8 | 10 | 1.41 € | 0.76 rps |

Benchmarking Llama 3.3, Amazon Nova, Gemini 1206

We’ll cover these models in one go.

Meta Llama 3.3 70B Instruct - 45th place.

Llama 3.3 70B Instruct held 40th place at the moment of its release, but a few better models have shown up since then. This is a common pattern: if a company doesn’t keep releasing better models, competitors push it down the ranking pretty fast.

Llama 3.3 70B has a decent Reason score, just below Llama 3.1 405B and the older Llama 3.1 70B. However, it doesn’t follow instructions in business tasks that well. This is a typical problem for Llama models. It would normally be fixed by good fine-tunes, except that the market has started realising that the ROI of fine-tuning models is lower in practice than it seemed. So we don’t expect any changes in its position anytime soon.

Amazon Nova - bad

Amazon has released its own family of LLMs: Amazon Nova Micro, Lite and Pro. They are very cheap to run and quite useless: Lite, Pro and Micro take 36th, 55th and 79th place respectively.

Do you know what the silver lining here is? These bad models achieve the quality of GPT-3.5, which was ground-breaking back in the day. So the models aren’t that bad; progress is just making us move the goalposts really fast without noticing it.

Google Gemini Experimental 1206 and 2.0 Flash Experimental

Google Gemini Experimental 1206 - not so good

Google Gemini Experimental 1206 took 28th place, which is much worse than Google Gemini 1.5 Pro v2 (7th place). The latter is really good if you manage to get past all the Google quirks. Still, the result is acceptable, because 1206 is only an experimental model, not an official release.

Yet, this matches the quality level of some GPT-4 Turbo versions!

Google Gemini 2.0 Flash Experimental is a more interesting model

It is still experimental, but it made its way into the TOP-10 of our benchmark!

Compared to the previous version of Flash (Gemini 1.5 Flash), this experimental model improved its reasoning capability from 44 to 62, while increasing the overall score from 75 to 84.

Google Gemini 2.0 Flash also pays a lot of attention to instructions (which is really important for Structured Output / Custom Chain of Thought patterns) and has achieved a perfect 100 score in Docs and Integrate. It is the first model to do so.

Google DeepMind writes that the model was created for automation and "agentic experiences", whatever that means. It has a 1M-token input context.

This model also potentially has the lowest usage cost among the top 19 models. The 20th model, DeepSeek v3 671B, is another contender on cost.

“Potentially”, because the price for Google Gemini 2.0 Flash is not known yet, so we assume it is the same as for Gemini 1.5 Flash.

Google continues to surprise us in a good way, continuously releasing new models that make it into the TOP-10. This has the side effect of pushing old favourites (Mistral and Anthropic) out of the spotlight. This doesn’t make those models worse; on the contrary, it means that we are getting more options to choose from!

DeepSeek v3

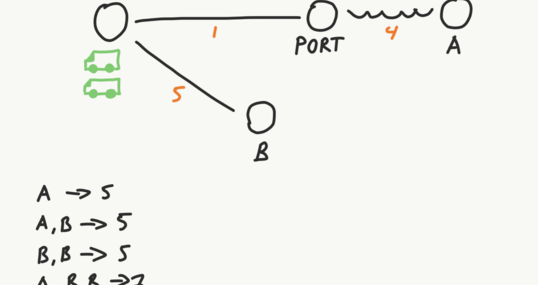

DeepSeek v3 is a recently released Mixture-of-Experts (MoE) language model with 671B total parameters. It tries to be efficient at inference, so only 37B parameters are activated for each token. This is reflected in the cost of running the model. The model can also be run locally: you can download it and run it on your own servers, provided there are enough GPUs to host the weights.

DeepSeek v3 has improved on the scores of its predecessor, DeepSeek v2.5 (now in the TOP 30). It solves business automation tasks in the CRM category with a score of 95 (up from 80). Its ability to solve software engineering tasks improved from 57 to 62 (although the model still has a long way to go to catch up with good old Claude 3.5 Sonnet v2 at 82).

Even though DeepSeek v3 activates only 37B parameters per token, this doesn’t make it easier to launch the model locally. Mixture of Experts (MoE) means “faster inference”, not “lower VRAM requirements”: all 671B parameters still have to be held in memory. We would need something like 8xH200 GPUs to run model inference locally. This makes the model not so suitable for local use.
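A back-of-the-envelope calculation makes the VRAM point concrete. The sketch below only counts the memory needed to hold the weights; the 141 GB per H200 figure is an assumption taken from NVIDIA's published specification, and the helper names are made up for this illustration.

```python
import math

TOTAL_PARAMS_B = 671    # total parameters in billions (from the text)
ACTIVE_PARAMS_B = 37    # parameters activated per token (from the text)
H200_VRAM_GB = 141      # assumption: memory of a single NVIDIA H200


def weights_gb(params_billions: float, bytes_per_param: float) -> float:
    """Size of the weights in GB: 1e9 params * bytes each / 1e9 bytes per GB."""
    return params_billions * bytes_per_param


def min_gpus(size_gb: float, vram_per_gpu_gb: float) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights."""
    return math.ceil(size_gb / vram_per_gpu_gb)


fp8_size = weights_gb(TOTAL_PARAMS_B, 1)    # FP8 stores one byte per parameter
bf16_size = weights_gb(TOTAL_PARAMS_B, 2)   # BF16 stores two bytes per parameter

print(f"All experts in FP8:  {fp8_size} GB, at least {min_gpus(fp8_size, H200_VRAM_GB)} GPUs")
print(f"All experts in BF16: {bf16_size} GB, at least {min_gpus(bf16_size, H200_VRAM_GB)} GPUs")
print(f"Active per token in FP8: {weights_gb(ACTIVE_PARAMS_B, 1)} GB")
```

The weights alone already need several GPUs in FP8, before any memory is reserved for the KV cache and activations, which is why a full 8-GPU node is a realistic starting point. The 37B active parameters reduce the compute per token, not the memory footprint.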

What is peculiar about DeepSeek v3: it is the first model at this scale to be trained with an FP8 mixed-precision framework. This approach makes training new LLMs faster, cheaper and less VRAM-hungry. It should potentially also lead to better out-of-the-box quantisation at inference. Let’s see if this technique helps to create more small and powerful local models.

Manual benchmark of OpenAI o1 pro - Gold Standard

Let’s move on to the hero of this LLM Benchmark: o1 pro from OpenAI. But before we proceed, there is an important caveat. There are 6 different flavours of the OpenAI o1 model; don’t mix them up!

o1-mini - the smallest and cheapest of all reasoning models. It is available both in the ChatGPT UI and over the API.

o1-preview - a really capable reasoning version that was previously available in the ChatGPT UI. It is no longer available there; the base o1 replaced it. o1-preview is still available directly through the API.

o1 - the model that replaces o1-preview in the ChatGPT UI. By default, this version has more limited reasoning capability in the UI, making it less capable than o1-preview. The model isn’t widely accessible via the API yet (only for Tier-5 accounts). o1 has three possible reasoning effort configurations in the API: high, medium and low. The higher the effort, the more expensive and capable the model becomes.

o1 pro - the most powerful model of them all. It is available in the ChatGPT UI for a monthly cost of $200. It isn’t available via the API yet.

There you have it: 4 flavours of the o1 model, plus 2 additional configurations (high and low) for the o1 model.

This section focuses solely on o1 pro. As an exception, this model was not tested via the API (because it isn’t available there yet), but manually through the ChatGPT UI. Here is how it was done:

We took the results from the o1-mini benchmark and selected only the tasks where o1-mini made mistakes. Since o1 pro is way more capable, we assumed that if o1-mini got something right, o1 pro would also answer correctly. This way we didn’t have to manually run a few hundred tasks from the entire benchmark, only a few dozen.

We made sure to disable custom instructions in the ChatGPT UI. Local memory was also disabled.

We converted benchmark requests from API format to a textual format and launched them manually by copy-pasting.
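The conversion step can be sketched roughly as follows. This is a minimal illustration, not the exact script used for the benchmark: it assumes requests are stored as OpenAI-style lists of `{"role", "content"}` messages, the function name `to_chat_ui_prompt` is hypothetical, and the Markdown layout it emits (Task, Example and Request headings) is the one we eventually settled on for o1 pro, described further below.

```python
from typing import Dict, List

Message = Dict[str, str]


def to_chat_ui_prompt(messages: List[Message]) -> str:
    """Flatten a role-based few-shot conversation into one pasteable prompt.

    Expects: optional system message(s), then alternating user/assistant
    sample pairs, then one final user message with the real request.
    """
    system = [m["content"] for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    *shots, real = turns  # the last turn is the real request

    parts = ["# Task", "\n".join(system)]
    # Pair each sample request with the sample response that follows it
    for user, assistant in zip(shots[0::2], shots[1::2]):
        parts += [
            "## Example",
            f"User: {user['content']}",
            f"Assistant: {assistant['content']}",
        ]
    parts += ["# Request", real["content"]]
    return "\n\n".join(parts)


api_request = [
    {"role": "system", "content": "Task explanation"},
    {"role": "user", "content": "sample request 1"},
    {"role": "assistant", "content": "sample response 1"},
    {"role": "user", "content": "sample request 2"},
    {"role": "assistant", "content": "sample response 2"},
    {"role": "user", "content": "real request"},
]
print(to_chat_ui_prompt(api_request))
```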

This is where we encountered the first gotchas.

First of all, o1 pro is embedded deeply in the ChatGPT UI, which tries to be convenient. For example, if a task has to return YAML, the response gets rendered as Markdown, which breaks it completely. We had to fix answers like that manually.

Second, we have historically formatted few-shot samples like this:

System: Task explanation

User: sample request 1

Assistant: sample response 1

User: sample request 2

Assistant: sample response 2

User: real request

We can’t do role-based prompting in the ChatGPT UI. Besides, the System prompt isn’t even accessible in the o1 line of models, to prevent reasoning tokens from leaking to end users (they are generated by the models without alignment and guardrails). The model isn’t only designed to protect its System prompt (also called the Platform prompt in the latest documentation); it also tries to work with the user via dialogue.

This led to an interesting outcome: the model gave lower priority to the System instructions and tried to find patterns in past conversations with the user. It could occasionally find them and arrive at incorrect conclusions, resulting in low Integrate scores.

So we had to start formatting o1 pro tasks like this:

# Task

Task explanation

## Example

User: sample request 1

Assistant: sample response 1

## Example

User: sample request 2

Assistant: sample response 2

# Request

real request

Having said that, what were the results?

o1 pro reached the very top of our benchmark, achieving an almost perfect 97 (the remaining 3 points could be attributed to ambiguous tasks in our benchmark).

Within our benchmark, which measures the capabilities of LLMs in business automation tasks, this model is like a gold ingot. It is perfect and expensive. It is overkill.

As always, this is good news for two reasons:

We have clearly arrived at the point where LLMs can easily solve all the tasks in our business automation challenges (from 18 months ago). Now we just need to wait for comparable models that are cheaper to run.

While developing the second version of the LLM benchmark, we can keep the current o1 pro capabilities in sight and formulate new tasks that will challenge even o1 pro. This will make the evaluation complexity curve smoother, helping the entire benchmark to be more representative of business automation tasks.

Benchmark of o1 (base) - 🥉TOP-3

Do you remember the disclaimer about different flavours of o1 models above?

This benchmark focuses on the o1 (base) model that was tested through the API with reasoning_effort set to medium. This is not necessarily the same model configuration as what is available via the ChatGPT UI.

The difference is not only in the compute limits; there is also a new chain of command (rules of robotics, as implemented by OpenAI for the reasoning models): Platform > Developer > User > Tool.

The o1 base model was tested automatically via the API, just like all the other models (except o1 pro). It took 3rd place: slightly better than o1-mini, slightly worse than o1-preview.

reasoning_effort was set to medium (the default value) and max_tokens was set to 25000 (as per OpenAI’s recommendation).
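For orientation, a request with these settings might look like the sketch below. It only builds the JSON payload for the Chat Completions endpoint and sends nothing. Two assumptions to flag: to our knowledge the API exposes the token limit for o1 models as `max_completion_tokens` (the text above calls it max_tokens), and the instructions go into a `developer` message, matching the chain of command mentioned above. The helper name `build_o1_request` is hypothetical.

```python
import json


def build_o1_request(task: str, request: str) -> dict:
    """Build a Chat Completions payload for o1 with the settings described above."""
    return {
        "model": "o1-2024-12-17",
        "reasoning_effort": "medium",       # low | medium | high
        "max_completion_tokens": 25000,     # budget shared by reasoning and answer tokens
        "messages": [
            {"role": "developer", "content": task},   # instructions from the integrator
            {"role": "user", "content": request},     # the actual request
        ],
    }


payload = build_o1_request("Task explanation", "real request")
print(json.dumps(payload, indent=2))
```

Raising `reasoning_effort` to high or lowering it to low gives the two additional o1 configurations mentioned earlier.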

What is peculiar: o1 base takes 3rd place both in capability and in cost. This makes for a very interesting curve: at the very top, reasoning capability is a function of cost.

o1-preview costs more than o1 base because it generates more tokens, but the result is also better. o1 pro just thinks deeper and more thoroughly in general.
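The claim is easy to check against the leaderboard. The short sketch below takes the Final score and the cost of the four o1 variants from the table and compares the two rankings; the helper name `ranking` is made up for the illustration.

```python
# (model, final score, cost in EUR), values taken from the benchmark table
O1_FAMILY = [
    ("o1 pro", 97, 200.00),
    ("o1-preview", 92, 52.32),
    ("o1", 91, 30.63),
    ("o1-mini", 89, 8.15),
]


def ranking(rows, column):
    """Model names ordered from highest to lowest value in the given column."""
    return [row[0] for row in sorted(rows, key=lambda row: row[column], reverse=True)]


by_score = ranking(O1_FAMILY, 1)
by_cost = ranking(O1_FAMILY, 2)

print("By score:", by_score)
print("By cost: ", by_cost)
print("Same order:", by_score == by_cost)
```

Within this family, paying more buys a higher score at every step, which is not true for the leaderboard as a whole.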

This trend is in line with recent research from Hugging Face on scaling test-time compute. It is about improving the quality of a 3B model to the level of a 70B model by spending more time on reasoning (and generating multiple candidate answers). So we can probably expect more LLM providers to start offering smarter models for an extra price (you pay for the reasoning tokens).

Perhaps afterwards there will also be new ways to run intensive reasoning locally (similar to what happened with local structured outputs).
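To give a feeling for what "spending more compute at inference" means, here is a sketch of the simplest variant: sample several answers and keep the most frequent one (majority voting). This is a deliberately simplified stand-in, not the method from the Hugging Face work, which relies on search guided by a reward model. The model is replaced by a scripted stub, and all names are hypothetical.

```python
from collections import Counter
from typing import Callable, Iterable


def majority_vote(sample_answer: Callable[[], str], n_samples: int) -> str:
    """Ask the model n_samples times and return the most common answer."""
    answers = [sample_answer() for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def scripted_model(answers: Iterable[str]) -> Callable[[], str]:
    """Stub standing in for a small LLM: replays a fixed list of answers."""
    iterator = iter(answers)
    return lambda: next(iterator)


# A weak model that is right only some of the time
noisy_small_model = scripted_model(["42", "41", "42", "40", "42"])
print(majority_vote(noisy_small_model, n_samples=5))
```

A single sample from this stub could easily be wrong, while five samples agree on the right answer. The price is five times the generated tokens, which is exactly the cost-for-quality trade described above.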

What about recently announced o3?

Recently OpenAI announced its new model o3, which solves tasks from the ARC-AGI dataset really well.

Why is there o1 and o3 but no o2? A naming conflict with the O2 telecom company could be the root cause.

What is ARC-AGI? It is a set of challenges that attempt to compare human intellect with that of a machine. The website claims that solving ARC-AGI would be even more outstanding than the invention of the transformer architecture.

Below is an example of one benchmark task. To solve it, the machine needs to figure out the rules and produce a pixel-perfect response.

As the story goes, o3 was able to solve almost all tasks from this benchmark. This is something that wasn’t considered possible before.

This makes o3 theoretically the best LLM. However, we believe that it won’t have a noticeable impact on business automation tasks in companies any time soon. There is a catch: costs.

Take a look at the chart below from the ARC-AGI announcement. It maps performance of different models vs the cost of solving a single task.

The cost scale is logarithmic. The cost of solving a single task with O3 HIGH (Tuned) was around 3200 USD per pixel-perfect response.

We mentioned earlier that o1 pro is the gold standard: it is perfect in business automation and already too expensive to be practical in most cases. o3 pushes the envelope even further.

Yet, adoption of LLMs works out well in cases where we gain a lot of value from automation. This business value is currently achievable in mundane tasks where LLMs are cheaper, more patient and more accurate than humans. These are simple and easily verifiable tasks like data extraction from documents, request classification, code generation, review of standard contracts etc.

The real issue here is cost-efficiency. o3 from OpenAI is not cheap at all, so it will not have a big impact. However, it might pave the way for improving the quality of other models, e.g. via the generation of high-quality synthetic data that could be used for training.

Our Predictions for 2025

These are opinionated predictions, based on the patterns observed among our AI Cases.

The hype around fine-tuning LLMs will die out

Fine-tuning of LLMs was frequently mentioned as a way “to train LLM on your company data” or “teach LLM new tricks”. Even OpenAI offers fine-tuning as a service.

In theory everything looks simple: just feed the LLM a lot of documents, and it will learn from them. What happens in practice: instead of getting better accuracy, teams suddenly end up with models that hallucinate a lot more. Most of the time they underestimate the complexity of preparing proper data and following the training regimen.

Among our AI Cases, only one project has successfully fine-tuned an LLM (we are not counting embedding models, of course). They had a lot of carefully prepared data for the task, and it still took them quite a few iterations.

We believe that in 2025 both businesses and software consultants/vendors will start to realise the real complexity and cost of fine-tuning LLMs. They will also understand the power that a good foundational LLM can already provide out of the box, especially if one leverages powerful inference patterns like Structured Outputs and Custom Chain-of-Thought.

Hype of Autonomous Agents will start fading away

We aren’t claiming that autonomous agents are impossible. If enough effort is invested, it is possible to deliver something like that.

However, the concept of an autonomous agent is not very practical. It is a complex product to design, build and integrate, while ensuring predictable quality.

Please let us stress one fact: agents are not technically complex. In essence, an agent is just a series of prompts that pass control and context to each other while using external tools. Yet, due to the shape of the product, it is really hard for humans to set up a cost-efficient process for delivering trustworthy agent-driven products. In practice things just start falling apart: budgets run out before the systems stop hallucinating.
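To show how little code the core loop needs, here is a minimal sketch. The LLM is replaced by a scripted stub, and the `CALL tool: argument` / `FINAL: answer` text protocol, the tool and all names are invented for this illustration; real frameworks differ in the details but follow the same shape.

```python
from typing import Callable, Dict

Tool = Callable[[str], str]


def run_agent(llm: Callable[[str], str], tools: Dict[str, Tool],
              goal: str, max_steps: int = 5) -> str:
    """Loop: prompt the model, run the tool it asks for, feed the result back."""
    context = [f"GOAL: {goal}"]
    for _ in range(max_steps):
        decision = llm("\n".join(context))
        if decision.startswith("FINAL:"):
            return decision[len("FINAL:"):].strip()
        # Otherwise the model asked for a tool: "CALL <tool name>: <argument>"
        name, _, argument = decision[len("CALL "):].partition(":")
        result = tools[name.strip()](argument.strip())
        context.append(f"{decision}\nRESULT: {result}")
    raise RuntimeError("Step budget exhausted before the agent finished")


def stub_llm(prompt: str) -> str:
    """Scripted stand-in for an LLM: look up the price once, then answer."""
    if "RESULT:" not in prompt:
        return "CALL lookup_price: widget"
    price = prompt.rsplit("RESULT: ", 1)[1]
    return f"FINAL: The widget costs {price}"


tools = {"lookup_price": lambda product: "9.99"}
print(run_agent(stub_llm, tools, "Find the price of a widget"))
```

The hard part is everything this sketch leaves out: a real model can request a tool that doesn't exist, loop until the step budget runs out, or confidently return a wrong final answer.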

Vendors will continue talking about agents in 2025 and selling “enterprise-ready agent frameworks” (they have investments to recoup), but we believe that the hype will start fading.

Will there be an AGI in 2025? What about LLM trends?

There will be no AGI in 2025. General intelligence is an even harder task to solve, especially since we are getting particularly adept at moving the goalposts of “what is an AGI?”. As the creators of ARC-AGI have written: “You'll know AGI is here when the exercise of creating tasks that are easy for regular humans but hard for AI becomes simply impossible.” And they are just working on v2 of their benchmark.

Still, a lot of companies will continue trying to compete with OpenAI for the spot of the most intelligent model. There is even a chance that Google will eventually dethrone OpenAI.

Just look at the trends of quality increases among models in 2024 (by different providers and cost categories):

As a new shortcut for improving model reasoning, we believe more AI vendors will start providing reasoning capabilities similar to the o1 models. This will be a temporary workaround to boost model accuracy quickly and without heavy investment: just throw in more compute, let the model think longer before answering, and charge more for the API.

However, we also believe that the upcoming hype around smart reasoning models that are outrageously expensive will start fading as well. It is just not very practical.

We also believe that AI vendors will start exposing more advanced features in their LLMs. Everybody already has large context and Prompt Caching (which already makes dedicated RAG impractical in many cases). But there still are powerful features that are not rolled out widely:

Structured Outputs (constrained decoding) - a powerful way to increase the quality of LLM answers in complex scenarios, especially when coupled with a custom chain of thought. At the moment only OpenAI has a usable implementation. Google is still catching up with its mildly unusable controlled generation that uses the VertexAI API format under the hood.

Document reasoning with VLMs. The latest LLMs are no longer text-only; they can also accept images or audio. This makes it possible to handle complex documents with tables and charts. Anthropic already has a flavour of this capability: it internally sends documents both as text and as images to its Sonnet 3.5 model, which works as a Vision-Language Model (VLM).

Native integration of LLMs with other tools - similar to how OpenAI has the Assistants API that allows its LLMs to use local RAG and a code execution sandbox. Anthropic is also trying to enter the playing field by introducing the Model Context Protocol (a standard for connecting LLMs to data sources and external tools, inspired by the Language Server Protocol).
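The first item in the list above, Structured Outputs with a custom chain of thought, can be sketched as follows. The idea, as we read the pattern, is to put the reasoning fields before the answer field in the schema, so that constrained decoding forces the model to write down facts and reasoning before it commits to an answer. The schema, the field names and the helper functions are illustrative assumptions; the `response_format` wrapper follows the shape OpenAI documents for strict JSON-schema outputs.

```python
import json

# Field order matters: the model fills the fields in this order,
# so the reasoning is generated before the final category.
TICKET_SCHEMA = {
    "type": "object",
    "properties": {
        "relevant_facts": {"type": "array", "items": {"type": "string"}},
        "reasoning": {"type": "string"},
        "category": {"type": "string", "enum": ["billing", "technical", "other"]},
    },
    "required": ["relevant_facts", "reasoning", "category"],
    "additionalProperties": False,
}


def response_format(name: str, schema: dict) -> dict:
    """Wrap a JSON schema in the response_format structure for strict outputs."""
    return {"type": "json_schema",
            "json_schema": {"name": name, "strict": True, "schema": schema}}


def extract_category(raw_json: str) -> str:
    """Read the model response and keep only the final answer field."""
    data = json.loads(raw_json)
    missing = [key for key in TICKET_SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"Response is missing fields: {missing}")
    return data["category"]


# Hypothetical model response that conforms to the schema
sample = json.dumps({
    "relevant_facts": ["Customer was charged twice in December"],
    "reasoning": "A duplicate charge is a payment issue, not a product defect.",
    "category": "billing",
})
print(extract_category(sample))
```

The application only consumes `category`; the two fields in front of it exist to steer the model and can be logged for debugging.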

We also expect that AI vendors will try to include unique features in their LLM APIs in order to attract users. There will be some standardisation (e.g. Google is testing VertexAI access via OpenAI libraries) and non-conformity at the same time (e.g. compare how differently prompt caching works at Google, OpenAI and Anthropic).

The whole situation is going to resemble the browser wars. Ultimately standards will start emerging, but until then we can expect a lot of quirks, frequent migration pains and evolving features.

Fortunately, if we look beyond a single provider at the market situation in general, bigger patterns start emerging. By optimising for the generic trends of the AI market, we can reduce the risk of making costly decisions and heading towards dead ends.

One last bit of the news for the next year. We are planning to run the second round of our Enterprise RAG Challenge at the end of February!

Enterprise RAG Challenge is a friendly competition where we compare how different RAG architectures answer questions about business documents.

We had the first round of this challenge last summer. The results were impressive: with just 16 participating teams we were able to compare different RAG architectures and discover the power of using structured outputs in business tasks.

The second round is scheduled for February 27th. Mark your calendars!


Martin Warnung

martin.warnung@timetoact.at
